First time I’ve heard of this. Since when?

Dunno when. I think it’s an old feature from before hardware virtualization was invented? The when isn’t important.

https://docs.oracle.com/en/virtualization/virtualbox/6.0/user/features-overview.html

No hardware virtualization required. For many scenarios, Oracle VM VirtualBox does not require the processor features built into current hardware, such as Intel VT-x or AMD-V. As opposed to many other virtualization solutions, you can therefore use Oracle VM VirtualBox even on older hardware where these features are not present. See Hardware vs. Software Virtualization.

Compare the XML files of the VMs that you can use versus the ones you cannot use.
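A minimal sketch of such a comparison, assuming both configurations are available as XML text (VirtualBox .vbox files and libvirt domain dumps from virsh dumpxml are both XML). The function name and the working.xml / failing.xml labels are hypothetical.

```python
import difflib


def diff_vm_xml(working_xml: str, failing_xml: str) -> list[str]:
    """Return unified-diff lines between two VM XML configuration texts."""
    return list(
        difflib.unified_diff(
            working_xml.splitlines(),
            failing_xml.splitlines(),
            fromfile="working.xml",  # hypothetical label for the usable VM
            tofile="failing.xml",    # hypothetical label for the unusable VM
            lineterm="",
        )
    )
```

Usage would be along the lines of `print("\n".join(diff_vm_xml(Path("working.xml").read_text(), Path("failing.xml").read_text())))`, with Path from pathlib. Lines starting with - or + are the settings that differ.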

QEMU would probably work, but that is unsupported.

related:

Not sure how that works.

https://docs.oracle.com/en/virtualization/virtualbox/6.0/admin/hwvirt.html

Oracle VM VirtualBox’s 64-bit guest and multiprocessing (SMP) support both require hardware virtualization to be enabled. This is not much of a limitation since the vast majority of 64-bit and multicore CPUs ship with hardware virtualization. The exceptions to this rule are some legacy Intel and AMD CPUs.

Since the downloadable Whonix images are 64-bit (32-bit or 64-bit?), this shouldn’t work. But since @nurmagoz confirmed that VirtualBox works, it seems VirtualBox has somewhat better out-of-the-box legacy hardware support.

Figured out this issue. It seems to be a Debian (or kernel) vs. my PC issue:

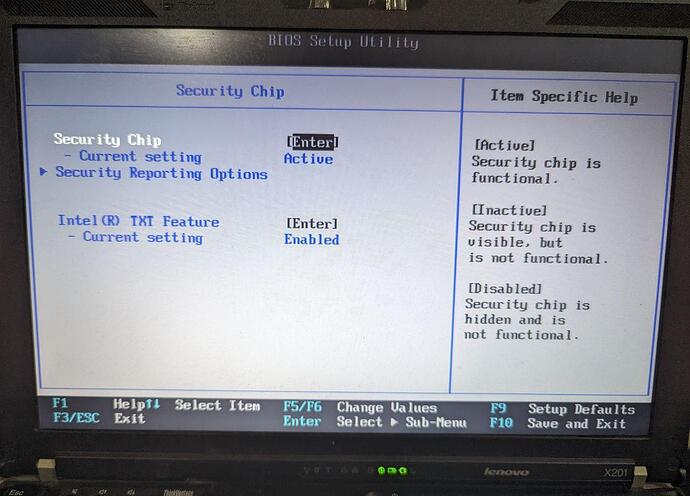

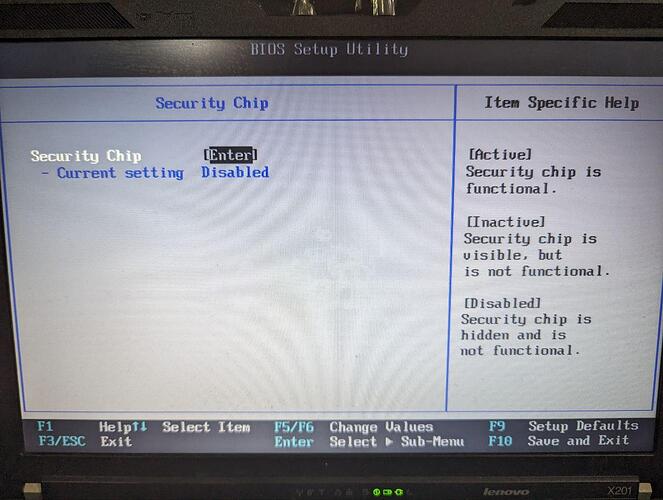

If TPM is activated in the BIOS,

this message appears at the beginning of the OS boot (and quickly disappears):

```
kernel: x86/cpu: VMX (outside TXT) disabled by BIOS
```

To solve it, one needs to disable the TPM feature:

Dunno if this has been reported upstream or not.
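To check whether the BIOS change took effect, a small sketch that looks for the vmx (Intel VT-x) and svm (AMD-V) flags in /proc/cpuinfo and for the /dev/kvm device. Assumption: as far as I know, recent kernels drop the vmx flag when they print the "disabled by BIOS" message, so an empty result would be consistent with that; treat it as a hint rather than proof. The function names are hypothetical.

```python
from pathlib import Path


def hw_virt_flags(cpuinfo_text: str) -> set[str]:
    """Return which of the vmx (Intel VT-x) / svm (AMD-V) flags cpuinfo lists."""
    # Assumption: recent kernels clear the vmx flag when firmware disables VMX.
    found: set[str] = set()
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        # Only the per-CPU "flags" lines; skips e.g. "vmx flags" sub-feature lines.
        if key.strip() == "flags":
            found |= {"vmx", "svm"} & set(value.split())
    return found


def kvm_device_present() -> bool:
    """True if the /dev/kvm device node exists (the kvm module has loaded)."""
    return Path("/dev/kvm").exists()
```

On the host this would be called as `hw_virt_flags(Path("/proc/cpuinfo").read_text())` and `kvm_device_present()`, once with TPM enabled and once with it disabled.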

Can you please test KVM with EFI? @HulaHoop

KVM EFI support might have improved considerably in the meantime. For example, Debian can nowadays easily be installed on EFI and even SecureBoot-enabled systems.

Therefore it would be good to test both EFI and SecureBoot.

Then make any changes needed to the Whonix libvirt KVM config files to support EFI and SecureBoot.

It is yet to be decided whether EFI (and maybe later SecureBoot) will become the new default for Whonix VMs, as per:

If there is anything that needs help or requires testing, please do let me know.

Btw, please Follow Whonix Developments for news. If there are major "testers wanted" announcements, these will be posted in the news forum.

Could Vagrant be a solution to the missing .ova appliance feature for libvirt / KVM? Vagrant supports .box files.

Vagrant is available in Debian.

https://wiki.debian.org/Vagrant

It supports libvirt. Package in Debian: vagrant-libvirt

Contains:

- /usr/share/doc/vagrant-libvirt/examples/create_box.sh

```
/usr/share/doc/vagrant-libvirt/examples/create_box.sh IMAGE [BOX] [Vagrantfile.add]

Package a qcow2 image into a vagrant-libvirt reusable box
```

Needs…?

- metadata.json
- Vagrantfile
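Regarding what the box needs: going by the vagrant-libvirt box format as I understand it (not checked against create_box.sh), a box is an archive containing box.img (the qcow2 disk), metadata.json and a Vagrantfile. A sketch that writes the metadata.json part; the field values and function names are assumptions, and the virtual size is a hypothetical parameter.

```python
import json
from pathlib import Path


def box_metadata(virtual_size_gb: int) -> dict:
    """Assumed vagrant-libvirt metadata.json fields; verify against create_box.sh."""
    return {
        "provider": "libvirt",            # Vagrant provider the box targets
        "format": "qcow2",                # disk image format of box.img
        "virtual_size": virtual_size_gb,  # assumed unit: GB
    }


def write_box_metadata(box_dir: Path, virtual_size_gb: int) -> Path:
    """Write metadata.json into a (hypothetical) box staging directory."""
    path = box_dir / "metadata.json"
    path.write_text(json.dumps(box_metadata(virtual_size_gb), indent=2) + "\n")
    return path
```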

I am not sure whether Vagrant can be fed existing libvirt XML files or whether it needs its own format?

But there could be limitations.

Please review:

KVM: Difference between revisions - Whonix

Long standing KVM issue:

VM internal traffic is visible on the host to network sniffers such as Wireshark and tshark, as well as to iptables (and therefore, by extension, also to corridor).

This is an issue because it means corridor (or something similar, if invented, such as perhaps a Whonix-Host KVM Firewall) cannot be used as an additional leak test or Tor whitelisting gateway.

Quoting myself from Whonix on Mac M1 (ARM) - Development Discussion - #35 by Patrick:

The hubport option might be much more secure, and something similar might also apply to Whonix KVM.

This is very important and needs the most attention to get right in order to avoid IP leaks.

From the UTM config files. Relevant options:

Whonix-Gateway

```
-device virtio-net-pci,netdev=external -device virtio-net-pci,netdev=internal -netdev user,id=external,ipv6=off,net=10.0.2.0/24 -netdev socket,id=internal,listen=:8010
```

Whonix-Workstation

```
-device virtio-net-pci,netdev=internal -netdev socket,id=internal,connect=127.0.0.1:8010
```

Doesn’t look crazy. Related documentation:

Documentation/Networking - QEMU

But it has the same issue as KVM: VM internal traffic is visible on the host to network sniffers such as Wireshark and tshark.

In the past, this has led to a failure to configure corridor on a Debian host with Whonix KVM.

references:

GitHub - rustybird/corridor: Tor traffic whitelisting gateway

testing on Debian host · Issue #28 · rustybird/corridor · GitHub

related:

Using corridor, a Tor traffic whitelisting gateway with Whonix ™

So it would be much better if KVM / QEMU (UTM) hid this from the host operating system, i.e. encapsulated the internal networking better. ChatGPT says this is possible using the hubport option, but ChatGPT unfortunately sometimes talks nonsense. Could you look into it please?
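As a starting point for looking into hubport, a sketch that assembles the networking options quoted above from the UTM config as QEMU argument lists, plus an untested hubport variant. QEMU documents -netdev hubport,id=…,hubid=… as a port on an emulated hub; my assumption, still to be verified, is that such a hub lives inside a single QEMU process, so on its own it may not connect two separately running VMs. The function names are hypothetical.

```python
def gateway_net_args(port: int = 8010) -> list[str]:
    """Whonix-Gateway NIC options as quoted from the UTM config."""
    return [
        "-device", "virtio-net-pci,netdev=external",
        "-device", "virtio-net-pci,netdev=internal",
        "-netdev", "user,id=external,ipv6=off,net=10.0.2.0/24",
        "-netdev", f"socket,id=internal,listen=:{port}",  # TCP listen socket
    ]


def workstation_net_args(port: int = 8010) -> list[str]:
    """Whonix-Workstation NIC options as quoted from the UTM config."""
    return [
        "-device", "virtio-net-pci,netdev=internal",
        "-netdev", f"socket,id=internal,connect=127.0.0.1:{port}",
    ]


def hubport_args(hub_id: int = 0) -> list[str]:
    """UNTESTED idea: attach the internal NIC to a QEMU-internal hub port."""
    return [
        "-device", "virtio-net-pci,netdev=internal",
        "-netdev", f"hubport,id=internal,hubid={hub_id}",
    ]
```

Note that the socket variant carries the internal traffic over a TCP connection on localhost, which is what the hubport idea would need to avoid.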

Pending changes piling up.

Missing documentation:

As mentioned in How to use Whonix-Gateway KVM for any other VM, operating system (Whonix-Custom-Workstation)?, using Anonymize Other Operating Systems is undocumented for Whonix KVM.

@Patrick please check your inbox.

If the user is still resorting to the command line at any point in the import process, then no. Even if the number of commands is cut down, the overhead of maintaining another abstraction layer isn’t justified IMO unless the UX is significantly better.

I’m not sure exactly what the implications of the change are, but I’d reckon that piping guest traffic through localhost breaks down the security guarantees of IP isolation and of having it on its own private subnet. Unless there’s a precedent for this on other virtualizer platforms that I’m not aware of (one that has been successfully leak-tested, of course), I’d steer clear.

That is the issue now with Whonix KVM…

Whonix KVM at time of writing:

- VM traffic visible on the host: yes

- Is that a problem? yes

- What is broken? corridor and host VPN fail-closed mechanisms.

- Potential solution, in theory: hubport

Whonix VirtualBox / Qubes-Whonix at time of writing:

- VM traffic visible on the host: no

- Is that a problem? no

- What is broken? Nothing.

- Leak tested: yes

No, that’s not what I’m seeing in my VM’s QEMU log in /var/log/libvirt/qemu. There’s no mention of my NIC listening on localhost, just the SPICE server.

What is UTM?

UTM is a full featured system emulator and virtual machine host for iOS and macOS. It is based off of QEMU. In short, it allows you to run Windows, Linux, and more on your Mac, iPhone, and iPad. Check out the links on the left for more information.

The way this macOS-based emulator chooses to manipulate QEMU in order to simulate the virtual environment is quite different from how KVM uses QEMU on Linux. I think this is a case of ChatGPT taking us for a ride.

The log is irrelevant. It’s not a listen port. It’s the virtual network interfaces created by KVM on the host operating system.

VM internal traffic is visible on the host for network sniffers such as wireshark, tshark as well as iptables (and therefore by extension also corridor).

… as we probably wondered years ago, and as you saw years ago in tshark, but finding that message would be challenging.

This is why firewall rules on the host (for example by corridor or VPN fail closed firewalls) can break Whonix-Workstation traffic.

These so far are the facts and these are unrelated to ChatGPT.

UTM is also irrelevant.

libvirt is a wrapper around QEMU. virsh domxml-to-native can translate an XML file into the actual QEMU command line that will be executed.

(Audit Output of virsh domxml-to-native)
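A sketch for that audit: run virsh domxml-to-native on a domain XML file and pull out the -netdev values, which show how each NIC is attached on the host side (tap, socket, hubport and so on). The file path is whatever domain XML is being audited; the output layout may differ between libvirt versions (for example JSON -netdev values or wrapped lines), hence the defensive parsing. The function names are hypothetical.

```python
import shlex
import subprocess


def qemu_argv(domain_xml_path: str) -> str:
    """Translate a libvirt domain XML file into the QEMU command line."""
    result = subprocess.run(
        ["virsh", "domxml-to-native", "qemu-argv", domain_xml_path],
        check=True, capture_output=True, text=True,
    )
    return result.stdout


def network_options(argv_text: str) -> list[str]:
    """Return the values of all -netdev options in a QEMU command line."""
    # Join backslash-continued lines, in case the command line is wrapped.
    args = shlex.split(argv_text.replace("\\\n", " "))
    return [args[i + 1] for i, arg in enumerate(args[:-1]) if arg == "-netdev"]
```

Usage would be `network_options(qemu_argv("Whonix-Workstation.xml"))`, with the file name being a placeholder.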

So if QEMU supports hubport, it can be assumed that such an important option is also available in libvirt.

(In theory, very recently added or obscure QEMU options might not exist in libvirt.)

In the worst case, libvirt: QEMU command-line passthrough could be used.
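For that fallback, libvirt's passthrough uses a <qemu:commandline> element with <qemu:arg value='…'/> children, with the qemu namespace declared on the <domain> element. A sketch that appends such arguments using Python's ElementTree; the hubport arguments in the usage example are only an illustration of what could be tested, not a verified configuration. The edited XML would then be loaded with virsh define. The function name is hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace libvirt uses for QEMU command-line passthrough.
QEMU_NS = "http://libvirt.org/schemas/domain/qemu/1.0"
# Serialize the namespace as xmlns:qemu on the root <domain> element.
ET.register_namespace("qemu", QEMU_NS)


def add_qemu_args(domain_xml: str, extra_args: list[str]) -> str:
    """Append raw QEMU arguments to a libvirt domain XML via <qemu:commandline>."""
    root = ET.fromstring(domain_xml)
    cmdline = root.find(f"{{{QEMU_NS}}}commandline")
    if cmdline is None:
        cmdline = ET.SubElement(root, f"{{{QEMU_NS}}}commandline")
    for arg in extra_args:
        ET.SubElement(cmdline, f"{{{QEMU_NS}}}arg", value=arg)
    return ET.tostring(root, encoding="unicode")
```

Example: `add_qemu_args(xml_text, ["-netdev", "hubport,id=hp0,hubid=0"])`, where xml_text is the domain XML as a string.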

KVM is also just a QEMU command line, just with different command-line options. From the perspective of the final, actually executed QEMU command line, the difference is just a few command-line parameters.

The problem is this:

```xml
<bridge name='virbr2' stp='on' delay='0'/>
```

The bridge virbr2 will be visible as a network interface on the host operating system to tools such as sudo ifconfig. This is the whole crux of Whonix KVM.

Avoiding this would make a lot of issues vanish.
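To see what the host sees, a sketch that lists interfaces under /sys/class/net whose names start with virbr (libvirt bridges) or vnet (the tap devices libvirt typically creates for guest NICs). The prefix list is an assumption based on libvirt's default naming; custom bridge names would need to be added. The function names are hypothetical.

```python
import os

# Assumed libvirt default name prefixes: virbrN bridges, vnetN tap devices.
LIBVIRT_PREFIXES = ("virbr", "vnet")


def filter_libvirt_interfaces(names: list[str]) -> list[str]:
    """Keep interface names that look like libvirt-created devices."""
    return sorted(name for name in names if name.startswith(LIBVIRT_PREFIXES))


def libvirt_host_interfaces(sys_class_net: str = "/sys/class/net") -> list[str]:
    """List libvirt-looking interfaces currently present on the host."""
    return filter_libvirt_interfaces(os.listdir(sys_class_net))
```

If the goal of hiding the internal network is reached, virbr2 (and the Workstation's vnet device) should no longer appear in libvirt_host_interfaces().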

So the easy one first… Currently:

```xml
<network>
  <name>Whonix-External</name>
  <forward mode='nat'/>
  <bridge name='virbr1' stp='on' delay='0'/>
  <ip address='10.0.2.2' netmask='255.255.255.0'/>
</network>
```

Is <bridge name='virbr1' stp='on' delay='0'/> strictly required? According to https://chat.openai.com/share/5d7c6ee9-a1ea-459f-9b50-19f2f03fe2a4 it is not.

Could you try without <bridge name='virbr1' stp='on' delay='0'/> please? Potential alternative:

```xml
<network>
  <name>Whonix-External</name>
  <forward mode='nat'/>
  <ip address='10.0.2.2' netmask='255.255.255.0'/>
</network>
```

If that does not work, any other options?
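A sketch that derives the proposed alternative by deleting the <bridge> element from the current Whonix-External definition. Caveat, going by libvirt's network XML documentation as I read it: when <bridge> is omitted, libvirt auto-generates a virbrN name for NAT networks, so a bridge may still show up on the host. Checking with ip link after virsh net-define would tell. The function name is hypothetical.

```python
import xml.etree.ElementTree as ET


def without_bridge(network_xml: str) -> str:
    """Return a libvirt network XML with any top-level <bridge> element removed."""
    root = ET.fromstring(network_xml)
    for bridge in root.findall("bridge"):
        root.remove(bridge)
    return ET.tostring(root, encoding="unicode")
```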

This one may be harder… Currently:

```xml
<network>
  <name>Whonix-Internal</name>
  <bridge name='virbr2' stp='on' delay='0'/>
</network>
```

Isolated mode perhaps?

I did not find how to do this.

Guess based on ChatGPT… Would this work? Potential alternative:

```xml
<network>
  <name>Whonix-Internal</name>
  <ip address='10.152.152.0' netmask='255.255.255.0'>
  </ip>
</network>
```
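Two details from libvirt's network XML documentation, as I read them, may help here: a network without a <forward> element is already isolated (which the current Whonix-Internal definition is), and an <ip> element gives the host an address on that network (which the proposed alternative would add, on the internal network). A sketch that summarizes both definitions for comparison; the function name and output keys are hypothetical.

```python
import xml.etree.ElementTree as ET


def describe_network(network_xml: str) -> dict:
    """Summarize the libvirt network properties relevant to host visibility."""
    root = ET.fromstring(network_xml)
    forward = root.find("forward")
    ip = root.find("ip")
    bridge = root.find("bridge")
    return {
        "name": root.findtext("name"),
        # No <forward> means isolated; <forward> without mode defaults to NAT.
        "mode": forward.get("mode", "nat") if forward is not None else "isolated",
        # An <ip> element gives the host an address on this network.
        "host_ip": ip.get("address") if ip is not None else None,
        "bridge": bridge.get("name") if bridge is not None else None,
    }
```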

Increase the KVM Whonix-Gateway RAM to 2 GB, because on the first start of the CLI version there is no internet connection (something doesn’t run), and increase it to 4 CPUs (similar to VirtualBox).
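A sketch of that change applied to a Whonix-Gateway domain XML, assuming "2 GB" means 2048 MiB; the edited XML would then be loaded with virsh define (or the same values set by hand with virsh edit). The function name is hypothetical.

```python
import xml.etree.ElementTree as ET


def set_resources(domain_xml: str, memory_mib: int, vcpus: int) -> str:
    """Set <memory>, <currentMemory> and <vcpu> in a libvirt domain XML."""
    root = ET.fromstring(domain_xml)
    for tag in ("memory", "currentMemory"):
        elem = root.find(tag)
        if elem is None:
            elem = ET.SubElement(root, tag)
        elem.set("unit", "MiB")  # libvirt accepts MiB as a memory unit
        elem.text = str(memory_mib)
    vcpu = root.find("vcpu")
    if vcpu is None:
        vcpu = ET.SubElement(root, "vcpu")
    vcpu.text = str(vcpus)  # existing attributes such as placement are kept
    return ET.tostring(root, encoding="unicode")
```

For the values above: `set_resources(gateway_xml, 2048, 4)`, where gateway_xml is the Whonix-Gateway domain XML as a string.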