Depends on whether, and what, the VM writes to the host.

I don’t know if there is an official definition of amnesia for live systems. Imho, the most important part is that the base system remains untouched and writes go to RAM. More or less every live ISO does that. The other part is wiping RAM when the machine gets shut down (afaik only implemented by Tails).

I’m thinking mostly about persistent malware and, to a lesser extent, about reading out RAM.

I’m certain Tails implemented the RAM wipe for a reason, but I still consider cold boot attacks rather unlikely. I have not yet seen such an attack in the wild, and if someone gets hold of your machine while it’s running they can read out RAM anyway. But I guess a RAM wipe could also be implemented.

If, as HulaHoop mentions, RAM is zeroed once it is no longer in use, then it would not matter either. More research on this is required.

Of course, if you want complete amnesia, then the live mode should be implemented on the host. I don’t see a reason why this would not work on e.g. a Qubes or a non-Qubes host. Qubes already uses dracut, and 3.2 also has a live ISO. Hardware with a correctly implemented write protection switch would be needed if you want to be really sure that nothing gets written to the disk.

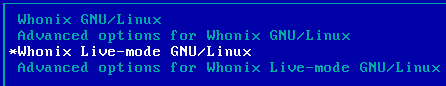

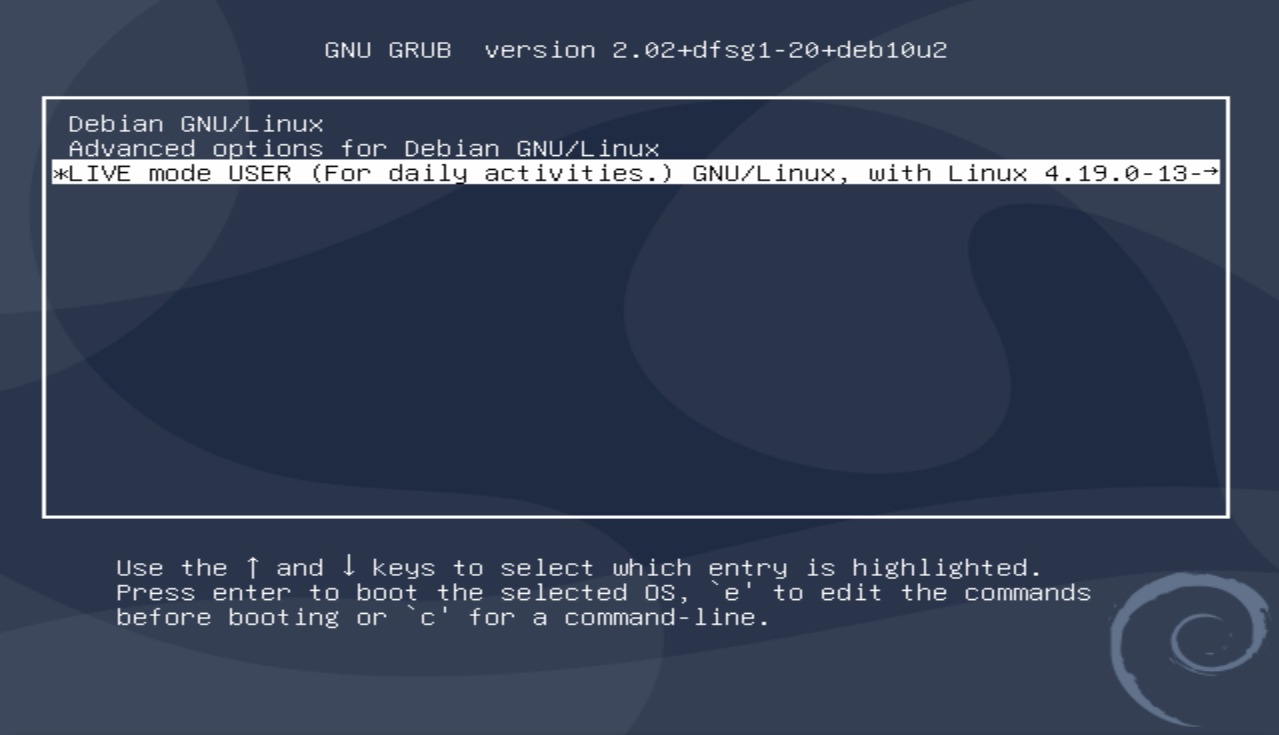

I will certainly take a closer look at this and do some testing + wiki editing. I’m confident that the live-mode can be implemented.

What you quoted is not directly related to that but could also be implemented. Dracut uses device mapper snapshots (or, if you want, overlayfs in newer versions). Once a file has been written to the snapshot, it is not possible to get that memory back, even if you delete the file. It does not work like tmpfs.

If you want to test it, just boot the live VM with 1-2 GB RAM and then create a large file, say 500 MB. Delete it, create it again, delete it, and so on. At some point the snapshot will overflow and the system will crash or become read-only.
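The test above could be scripted roughly like this. It is only a sketch: the function name `churn`, the file name `churn.bin` and the default sizes are made up for illustration, and how the system reacts when the snapshot fills up (I/O error, read-only remount, crash) will depend on the setup.

```python
import os


def churn(directory, size_mb=500, rounds=10, chunk_mb=1):
    """Repeatedly create and delete a file of size_mb MiB in directory.

    Returns the number of rounds completed before an OSError (for example
    "no space left" or a read-only filesystem) stopped the loop.
    """
    chunk_bytes = chunk_mb * 1024 * 1024
    path = os.path.join(directory, "churn.bin")  # hypothetical file name
    completed = 0
    for _round in range(rounds):
        try:
            with open(path, "wb") as f:
                for _chunk in range(size_mb // chunk_mb):
                    # Random data, so the blocks cannot be stored as zeros.
                    f.write(os.urandom(chunk_bytes))
                f.flush()
                os.fsync(f.fileno())  # push the data down to the block device
            os.remove(path)
        except OSError as exc:
            print(f"stopped after {completed} rounds: {exc}")
            break
        completed += 1
    return completed
```

Inside the live VM you would call something like `churn("/root", size_mb=500, rounds=20)` and watch whether it stops early. If the system crashes outright, the script obviously never gets to report anything.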

I was under the impression that thin snapshots would be the solution but either I was wrong or there are some bugs.

Using overlayfs would be a solution too, but only when used for the virtual machines. On the host you would need a large amount of RAM, since overlayfs copies a whole file to the upper layer (here RAM) as soon as it is changed, and that includes big VM disk images. I’m also not sure how mature overlayfs in dracut is at the moment. It is also not in the dracut version for stretch.

Live mode and in-RAM boot are not the same. Live mode basically means not writing to the underlying system. In-RAM boot = live mode + copying the whole filesystem to RAM. Copying to RAM takes a while, depending on what you boot from, but once it has finished the system will be really fast. You can then also remove the USB stick, disc or DVD and it will continue to run. You can have this with dracut too, just append rd.live.ram to the kernel command line. I did not test it with the live mode approach described here, since the RAM requirements would be even bigger (we don’t boot from a compressed filesystem as e.g. a normal live ISO does).
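To check from inside a running system whether it was booted with that option, the kernel command line can be inspected. A small sketch: the function name is hypothetical, and the way values are interpreted (a bare `rd.live.ram` counts as on, `0`/`no`/`off` as off) is my assumption about how dracut treats boolean options, so verify it against your dracut version.

```python
def live_ram_requested(cmdline):
    """Return True if the given kernel command line enables rd.live.ram."""
    enabled = False
    for token in cmdline.split():
        key, sep, value = token.partition("=")
        if key != "rd.live.ram":
            continue
        # Assumption: a bare flag enables it, 0/no/off disable it.
        # If the option appears several times, the last one wins here.
        enabled = (not sep) or value not in ("0", "no", "off")
    return enabled
```

On a booted system you would feed it the content of `/proc/cmdline`, e.g. `live_ram_requested(open("/proc/cmdline").read())`.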

A live ISO for the Whonix VMs would also be possible. I did this before with the normal Debian live stuff, but it would also work with dracut. It is not that easy to update an ISO, however.

Sort of true. Ext3 and ext4, for example, use journaling, so this applies: GitHub - msuhanov/Linux-write-blocker: The kernel patch and userspace tools to enable Linux software write blocking

When you use the system the way it is described on the rpi forum, you will see that the hash sum of the VM images changes unless you use ext2. This did not happen to me with dracut + device mapper under normal usage, even with ext3 or ext4, but after a VM crash the hash was also different. If you make the VM read-only via the VM software this should not happen, and it did not happen in the many cases I tested.
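Comparing the hash sums before and after a session is easy to script. A minimal sketch, with hypothetical function names, that hashes the image in chunks so a large disk image does not have to fit in RAM:

```python
import hashlib


def sha256_file(path, chunk_size=1024 * 1024):
    """Return the SHA-256 hex digest of the file at path, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def image_changed(path, known_digest):
    """True if the image's current digest differs from the recorded one."""
    return sha256_file(path) != known_digest
```

Record `sha256_file(...)` for each VM image while the VM is powered off, run the live session, shut the VM down and then call `image_changed(...)` with the recorded value.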

Also, some malware could remount the disk rw when there is no write protection via the VM software. With that protection in place, I think it would need to break out of the VM to make the image rw.

@Hexagon

I was also thinking about a (switchable) live host OS, but this requires some broader discussion (going to start a thread …), e.g. what OS to use, and whether to provide some kind of installation tutorial or just a USB image. The latter would probably be the easiest for novice end users. Qubes is of course also an option.

Quite a while back I tested some whonix ws + gw on a debian host iso. Everything fit on a normal DVD.

In the end, the question is what kind of live system is desired (ISO or uncompressed/plain filesystem). Imho, the most useful option would be the system described here (so no ISO), since you can have FDE + updates. You can have FDE for ISO-based systems too, but it is not that useful. Hardware write protection for the host OS is the only bigger issue I see at the moment.